Introduction

We looked at the different ways companies are using containerization in modern software development. Deployment, scaling, and management have become some of the biggest factors for success in today's software industry. Docker has transformed the way we ship and run applications and has been a main player in this shift. It has simplified running applications inside containers, so developers can package their applications to run smoothly in various environments.

What is Docker?

Docker is an open-source platform that automates the deployment of applications using OS-level virtualization, so an application does not need to know the details of the machine it runs on. Containers are the heart of the system. Each one packages an application together with its configuration and anything else it needs to run. These containers can be used for development, testing, and production without changes.

Suppose you develop a program on your personal computer and everything works perfectly. However, when you try to run it on another machine or operating system, the program may run into issues. The operating system may be slightly different, the required libraries may be missing, or there might be version clashes. This is where containerization comes into play. It hides the differences between infrastructures by packaging the code together with its dependencies in a standardized form, so these problems are eliminated.

Key Benefits of Docker

- Consistency Across Environments: Docker containers encapsulate all the required components, ensuring that applications run the same way, no matter where they are deployed.

- Portability: Containers can be moved easily between different environments, whether it's your local machine, a data center, or a cloud provider.

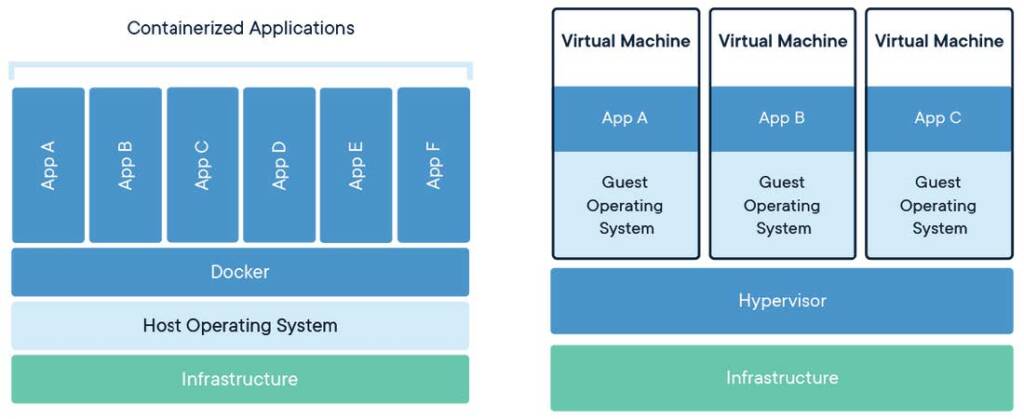

- Resource Efficiency: Unlike virtual machines that run full operating systems, Docker containers share the host machine's OS kernel, making them lighter and more efficient.

- Isolation: Applications run in isolated environments, reducing the risk of conflicts and improving security.

What are Containers?

Containers are the most essential element of the ecosystem. To put it simply, a container is a lightweight, standalone, executable unit of software that includes everything an application needs to run: the code, the libraries the application depends on, system tools, and configuration files. Because containers are standalone and isolated from the host system, they provide security and stability to the applications.

How Do Containers Work?

Containers differ from traditional virtual machines (VMs) in that they don't need a whole operating system for each instance and instead share the OS kernel of the host machine. As a result, containers start much faster and use fewer system resources than VMs. For instance, when running a Node.js application in a Docker container, the container has the Node.js runtime and any libraries the application is using. Because the containers are isolated, you can have several releases of Node.js running at the same time on the same host without them interfering with each other.
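As a rough illustration of running several Node.js releases side by side, the sketch below asks one container per release for its Node.js version. It assumes Docker is installed and that the official node image tags you pass in (for example 18 and 20) can be pulled. The helper name node_version_in_container and the script name are made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: print the Node.js version found inside the
# official "node" image for a given tag (for example 18 or 20).
node_version_in_container() {
  tag="$1"
  # --rm removes the container as soon as the command exits
  docker run --rm "node:${tag}" node --version
}

# Run the helper for each tag passed on the command line, e.g.:
#   sh node-versions.sh 18 20
for tag in "$@"; do
  node_version_in_container "$tag"
done
```

Each container reports its own version, and nothing is installed on the host apart from Docker itself.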

Advantages of Docker Containers

- Efficiency: Containers are lightweight and less resource-intensive as they use the same OS kernel as the host system and, therefore, are more efficient than traditional VMs.

- Isolation: Containers provide process isolation, which means that if one container fails, the others running on the same host are not affected.

- Scalability: Docker containers can be easily replicated across multiple hosts or scaled up as traffic increases. Tools such as Docker Compose and Kubernetes manage these operations.

- Flexibility: A single server can run more than one container, each with its own version of an application or framework, which prevents conflicts when different versions or dependencies are required.

These containers are popular because they ensure a consistent environment from development to production, solving the common issue of "it works on my machine" by making sure it works the same everywhere.

What are Docker Images?

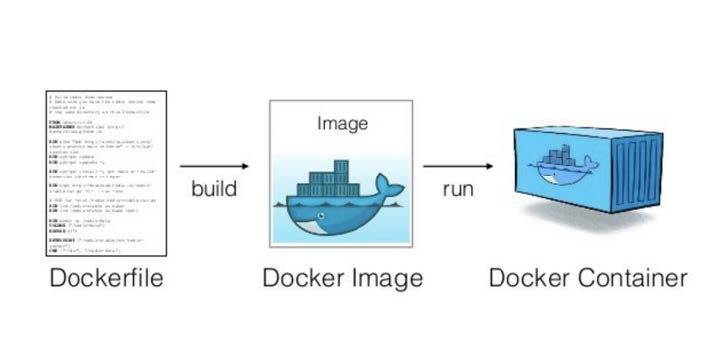

Whereas a container is a running instance of an application, an image is the blueprint from which containers are created. In this context, an image is a read-only template, built from a set of instructions, that is used to create a container. Images include everything needed to run the application: the code, configuration files, environment variables, libraries, and any dependencies.

Layers in Docker Images

Images consist of layers. Each instruction in a Dockerfile adds a step to the image, and instructions that change the filesystem (such as RUN, COPY, and ADD) create a new layer. This is helpful because it allows layers to be reused, speeding up the build process and cutting down on duplication. If two images have layers in common (for example, the same operating system or the same runtime environment), those layers are reused. This layering system makes images efficient and modular. You do not have to rebuild the whole image to update your application; Docker rebuilds only the layer that changed and the layers that come after it.
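One way to look at layers in practice is the docker history command, which lists the build steps that make up a local image. The sketch below assumes Docker is installed and that the images you name already exist locally. show_layers and the script name are hypothetical names used only here.

```shell
#!/bin/sh
# Hypothetical helper: list the build steps (layers) of a local image.
show_layers() {
  image="$1"
  # One row per build step; metadata-only steps such as CMD
  # are listed with a size of 0B.
  docker history "$image"
}

# Inspect every image named on the command line, e.g.:
#   sh show-layers.sh node:14
for image in "$@"; do
  show_layers "$image"
done
```

Comparing the output for two images built on the same base is a quick way to spot the layers they share.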

Developers often use images from Docker Hub, a central registry that hosts images shared by the community and organizations. For example, you can easily find an official image for most popular software, such as Python, MySQL, or Nginx. Such images are a starting point for your applications, so you do not have to deal with the complexities of the underlying dependencies yourself. If your application has special needs, you can write a Dockerfile to create a custom image.

In short, a Dockerfile is just a simple text file that contains a list of instructions that are followed in order to build your image. You can then share the image with others or deploy to production directly from there.

Dockerfiles:

A Dockerfile is a simple text file containing a series of commands (instructions) used to assemble an image. It automates the process of creating an image by specifying all the necessary steps, such as installing software, copying files, setting environment variables, and configuring services.

Common Instructions in a Dockerfile:

- FROM: Specifies the base image (starting point).

- RUN: Executes commands to install software or dependencies.

- COPY / ADD: Copies files from your local system into the image.

- WORKDIR: Sets the working directory inside the container.

- EXPOSE: Declares which network port the container will listen on.

- CMD / ENTRYPOINT: Defines the default command or script to run when the container starts.

Docker File Example:

Explanation:

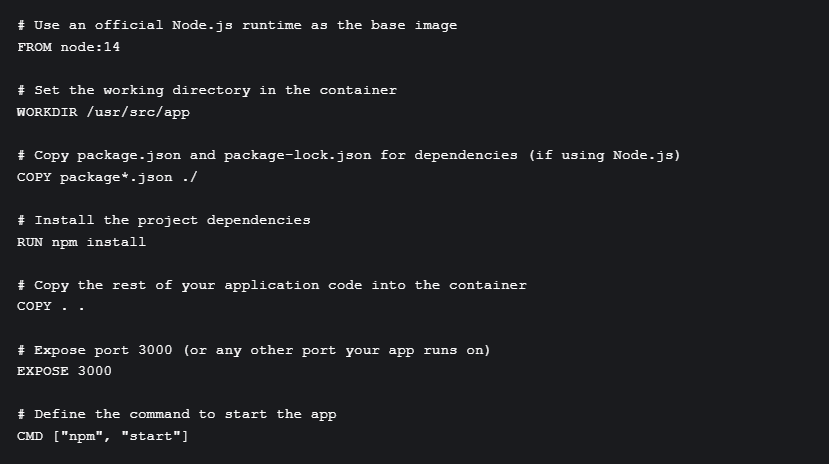

In the root of your VS Code project, create a file named Dockerfile with the necessary code.

- The node:14 image already has Node.js installed, so you don't need to manually install Node.js in the container. You can switch to a different base image for other languages if needed.

- The WORKDIR instruction specifies a directory inside the container that will be used as the current working directory for any subsequent commands in the Dockerfile. If the specified directory (/usr/src/app in this case) does not exist, Docker will create it automatically.

- The first COPY instruction copies the package.json and package-lock.json files to the working directory.

- The RUN npm install instruction installs all the dependencies listed in your package.json file.

- The second COPY instruction copies all project files into the container.

- The EXPOSE 3000 instruction is important because it tells users of the Docker image which port your application listens on for incoming connections. It does not publish the port by itself; the -p option of docker run maps it to the host. Example: if a web application container listens on port 3000, another container on the same Docker network can access it via http://<container-name>:3000.

- CMD ["npm", "start"] in the Dockerfile specifies that when the container is run, it should execute the npm start command, which starts the application.
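Put together, the steps explained above suggest a Dockerfile along the following lines. This is a reconstruction from the explanation, not a copy of the original file, so treat the exact COPY patterns as assumptions. The sketch writes the file from a terminal into a scratch directory; docker-demo is a hypothetical directory name, and in a real project the Dockerfile would sit next to package.json.

```shell
#!/bin/sh
# Directory that receives the example Dockerfile.
# "docker-demo" is a made-up default; pass another path as the first argument.
project_dir="${1:-docker-demo}"
mkdir -p "$project_dir"

# The quoted 'EOF' keeps the shell from expanding anything inside the file.
cat > "$project_dir/Dockerfile" <<'EOF'
# Base image with Node.js already installed
FROM node:14

# Working directory inside the container (created if it does not exist)
WORKDIR /usr/src/app

# Copy the dependency manifests first so this layer can be cached
COPY package*.json ./

# Install the dependencies listed in package.json
RUN npm install

# Copy the rest of the project files
COPY . .

# Document the port the application listens on
EXPOSE 3000

# Default command when the container starts
CMD ["npm", "start"]
EOF

echo "Wrote $project_dir/Dockerfile"
```

Copying the package files before the rest of the project means the npm install layer can be reused from the build cache as long as the dependencies have not changed.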

Building the Docker Image:

To build the Docker image from the Dockerfile, you would run:

docker build -t img-name .
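If you build often, the command above can be wrapped in a small helper that also lists the resulting image to confirm the tag exists. This is only a sketch: build_image is a hypothetical name, and it assumes Docker is installed and that the build context contains a Dockerfile.

```shell
#!/bin/sh
# Hypothetical helper: build an image, then list it to confirm the tag exists.
build_image() {
  name="$1"          # image name passed to -t, e.g. img-name
  context="${2:-.}"  # build context directory, defaults to the current one
  # "docker image ls NAME" runs only if the build succeeded
  docker build -t "$name" "$context" && docker image ls "$name"
}

# Build when the script is given arguments, e.g.:
#   sh build-image.sh img-name .
if [ "$#" -gt 0 ]; then
  build_image "$@"
fi
```

The trailing dot in the original command is the build context, which is why the helper defaults to the current directory.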

Running the Container:

docker run -p 3000:3000 --name my-node-app-container img-name

A Few Docker Commands:

- docker pull [image_name]

- Explanation: Downloads a Docker image from Docker Hub (or any specified registry) to your local system. It's the starting point for running containers.

- docker run [image_name]

- Explanation: Runs a Docker container based on the specified image. If the image isn't already downloaded, Docker will pull it first.

- docker ps

- Explanation: Lists all currently running Docker containers. You can see container IDs, names, statuses, and more.

- docker stop [container_id]

- Explanation: Stops a running container by specifying the container ID or name. This is useful for shutting down containers without deleting them.

- docker rm [container_id]

- Explanation: Removes a stopped container. This helps free up resources and avoid clutter in your Docker environment.
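The commands above can be strung together to walk one container through its whole life. The sketch below is a minimal example under a few assumptions: Docker is installed, the image can be pulled, and the container name is free. container_lifecycle, the script name, and the demo-web name in the usage comment are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: pull an image, run it, list it, stop it, remove it.
container_lifecycle() {
  image="$1"   # image to pull and run, e.g. nginx
  name="$2"    # name to give the container, e.g. demo-web

  docker pull "$image" || return 1
  # -d starts the container in the background so the script can continue
  docker run -d --name "$name" "$image" || return 1
  # Show only the container started above
  docker ps --filter "name=$name"
  docker stop "$name"
  docker rm "$name"
}

# Run when given an image and a container name, e.g.:
#   sh lifecycle.sh nginx demo-web
if [ "$#" -eq 2 ]; then
  container_lifecycle "$1" "$2"
fi
```

Using a name instead of a container ID keeps the stop and rm steps readable, since docker stop and docker rm accept either.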

Conclusion:

Docker has revolutionized software development with lightweight containers that simplify deployment and ensure consistency across environments. Its efficiency has made it essential for modern DevOps and cloud-native applications.

Looking ahead, Docker's advancements in Kubernetes integration, edge computing, and security will continue to shape scalable and automated solutions. To explore more, visit the Docker official website.